Selected Projects

Meta-learning

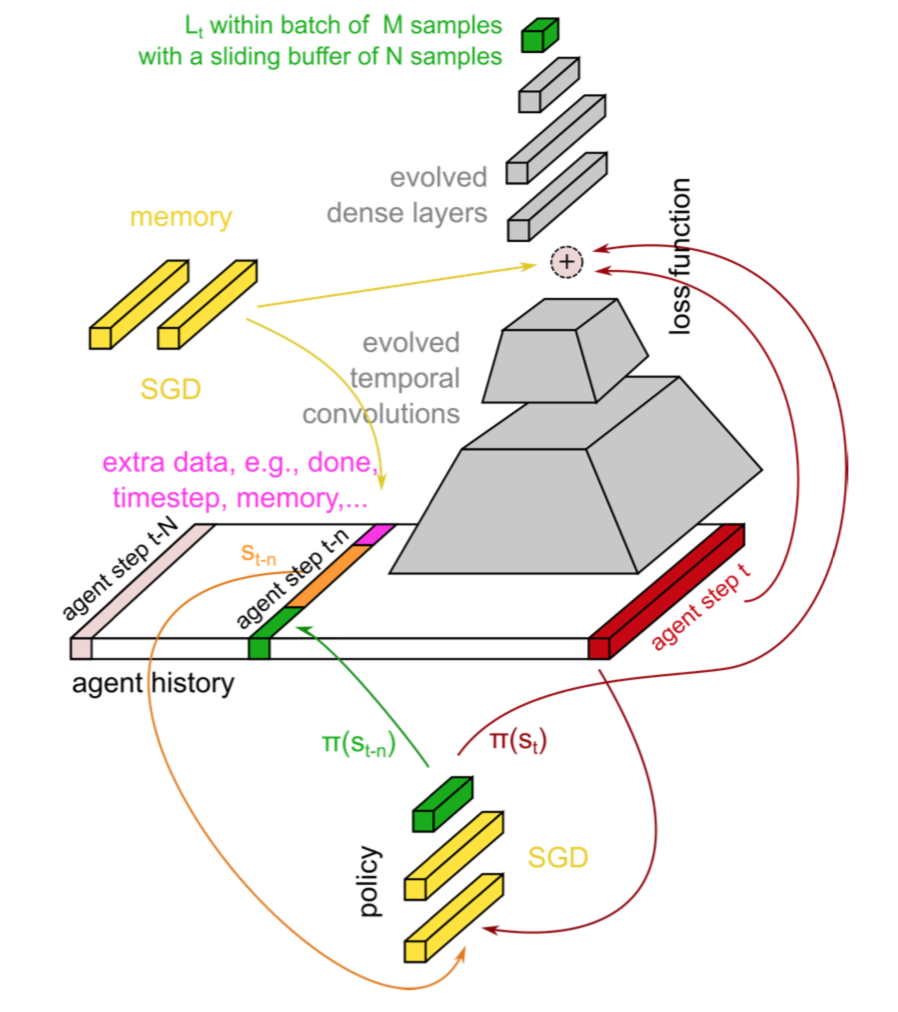

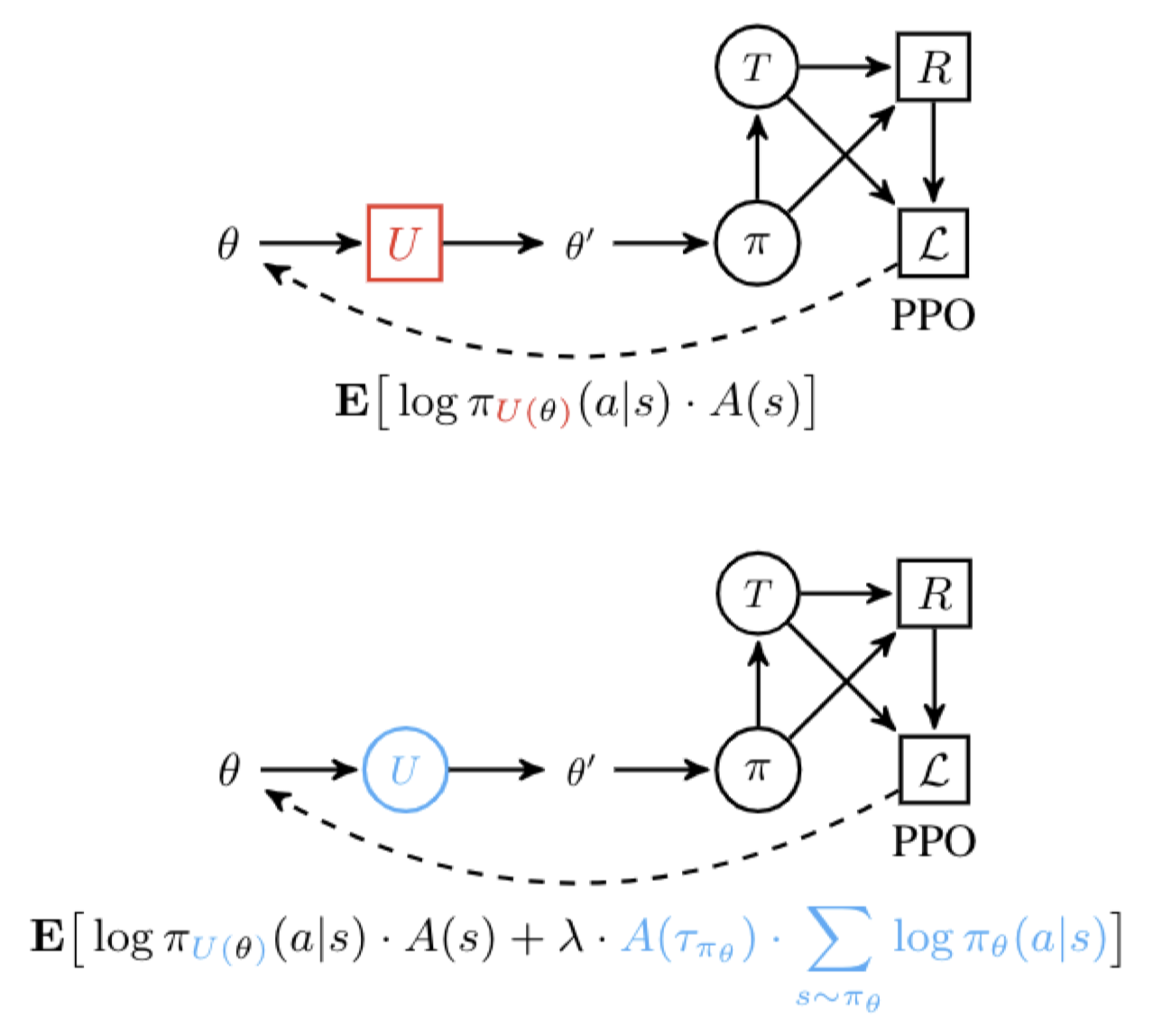

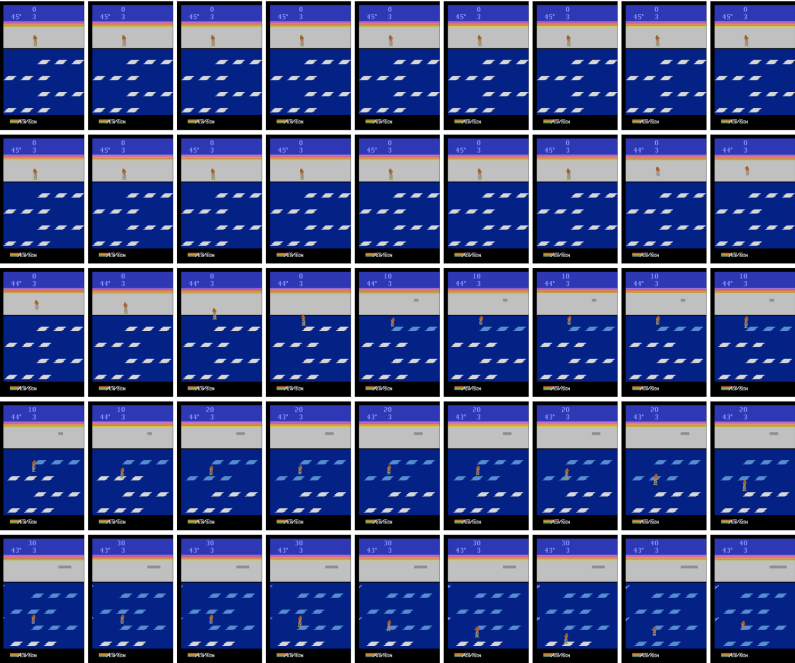

Learning to learn in deep reinforcement learning (RL), including learning to explore without the use of additional structures. Below are videos of a robot learning to grasp from scratch without simulator resets and of a robot learning to walk towards targets outside the training distribution, using Evolved Policy Gradients (EPG).

Evolved Policy Gradients. Advances in Neural Information Processing Systems (NeurIPS), 2018. R. Houthooft, R. Y. Chen, P. Isola, B. C. Stadie, F. Wolski, J. Ho, P. Abbeel.

Some Considerations on Learning to Explore via Meta-Reinforcement Learning. Advances in Neural Information Processing Systems (NeurIPS), 2018. B. C. Stadie, G. Yang, R. Houthooft, X. Chen, Y. Duan, Y. Wu, P. Abbeel, I. Sutskever.

Deep Reinforcement Learning

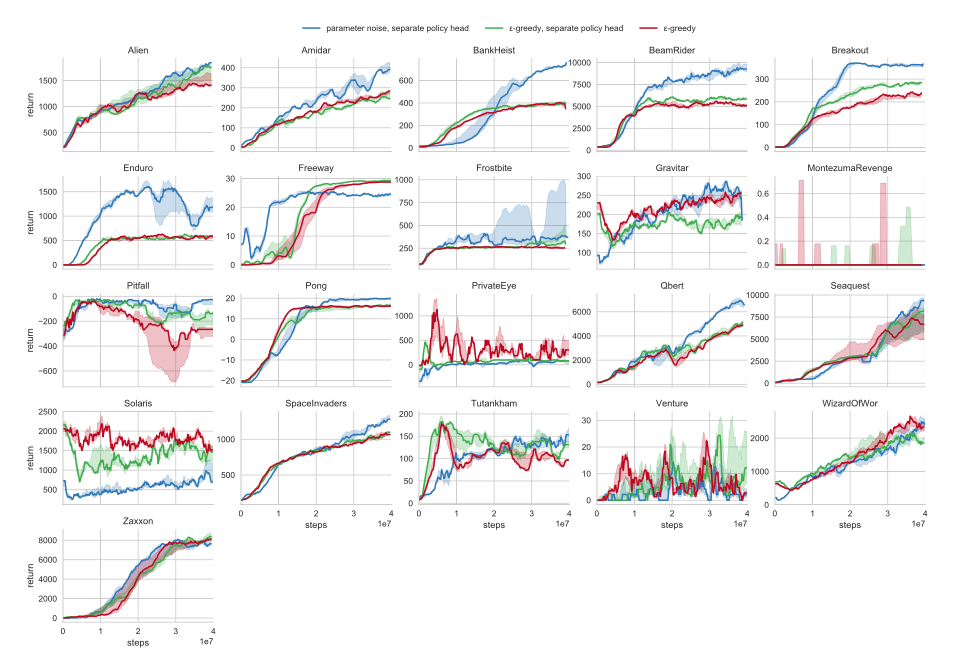

Reinforcement learning (RL) using nonlinear function approximators with a focus on continuous control tasks such as robot locomotion. In particular, the goal is to investigate how to achieve efficient exploration in deep RL through curiosity. This research was performed in collaboration with OpenAI and the Berkeley AI Research lab.

Parameter Space Noise for Exploration. International Conference on Learning Representations (ICLR), 2018. M. Plappert, R. Houthooft, P. Dhariwal, S. Sidor, R. Y. Chen, X. Chen, T. Asfour, P. Abbeel, M. Andrychowicz.

#Exploration: A Study of Count-Based Exploration for Deep Reinforcement Learning. Advances in Neural Information Processing Systems (NIPS), 2017. H. Tang, R. Houthooft, D. Foote, A. Stooke, X. Chen, Y. Duan, J. Schulman, F. De Turck, P. Abbeel.

VIME: Variational Information Maximizing Exploration. Advances in Neural Information Processing Systems (NIPS), 2016. R. Houthooft, X. Chen, Y. Duan, J. Schulman, F. De Turck, P. Abbeel.

Benchmarking Deep Reinforcement Learning for Continuous Control. International Conference on Machine Learning (ICML), 2016. Y. Duan, X. Chen, R. Houthooft, J. Schulman, P. Abbeel.

Generative Models

InfoGAN is a generative adversarial network that also maximizes the mutual information between a small subset of the latent variables and the observation. We derive a lower bound on the mutual information objective that can be optimized efficiently.
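For reference, the lower bound mentioned above can be written out as follows. The notation is an assumption taken from the InfoGAN paper rather than from this page: $c$ is the structured subset of latent variables, $z$ the remaining noise, $G$ the generator, $D$ the discriminator, $Q(c \mid x)$ an auxiliary distribution approximating the posterior over $c$, $V(D, G)$ the standard GAN objective, and $\lambda$ a weighting hyperparameter.

```latex
\begin{align}
I\bigl(c;\, G(z,c)\bigr)
  &= H(c) - H\bigl(c \mid G(z,c)\bigr) \\
  &\ge \mathbb{E}_{c \sim P(c),\; x \sim G(z,c)}\bigl[\log Q(c \mid x)\bigr] + H(c)
  \;=\; L_I(G, Q), \\
\min_{G,\,Q}\,\max_{D}\; V_{\text{InfoGAN}}(D, G, Q)
  &= V(D, G) - \lambda\, L_I(G, Q).
\end{align}
```

The inequality holds because the KL divergence between the true posterior $P(c \mid x)$ and its approximation $Q(c \mid x)$ is non-negative. The bound is efficient to optimize because $L_I$ only requires samples of $c$ and $x = G(z, c)$, not the true posterior.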

InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets. Advances in Neural Information Processing Systems (NIPS), 2016. X. Chen, Y. Duan, R. Houthooft, J. Schulman, I. Sutskever, P. Abbeel.

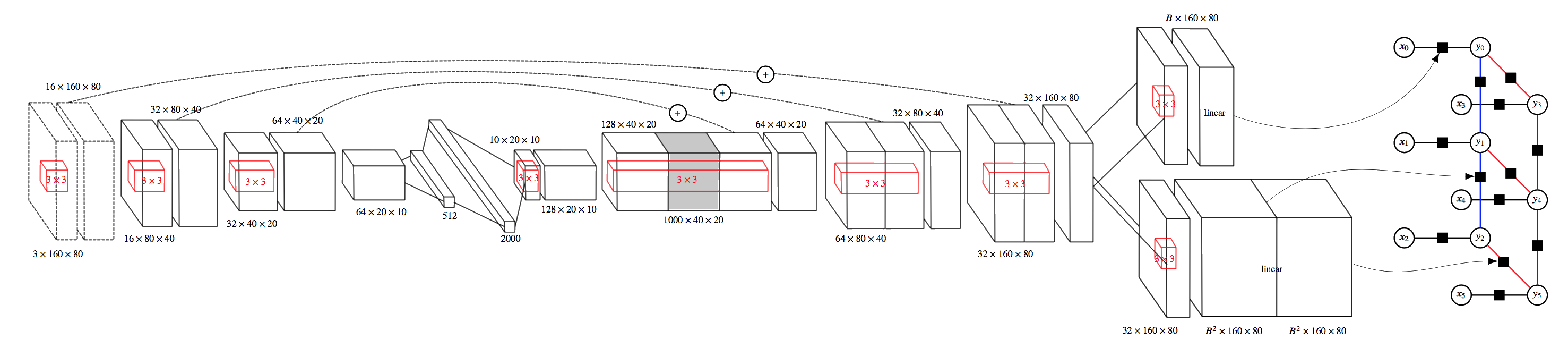

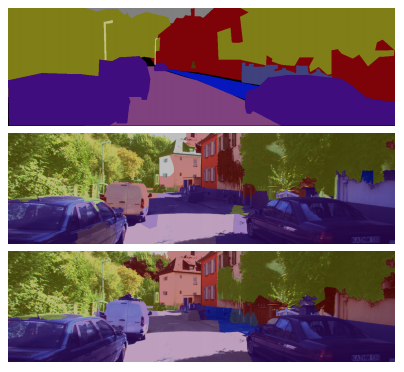

Structured Prediction and Deep Learning

As part of an autonomous vehicle project, the goal was to combine structured output prediction and deep learning techniques, with a particular focus on semantic image segmentation. Structural support vector machines (SSVMs) were extended to allow for highly nonlinear factors. This can enhance the output coherence of deep predictive models while still allowing for end-to-end training. The architecture of a deep SSVM with convolutional neural factors is pictured below.

Integrated Inference and Learning of Neural Factors in Structural Support Vector Machines*. Pattern Recognition, vol. 59, 2016. R. Houthooft, F. De Turck.

* This work is part of an applied research project in collaboration with Case New Holland (CNH) Industrial. As such, several methods, models, datasets, and results could not be publicly released due to confidentiality agreements. An addendum to these papers can be found here. Initial vehicle controller patent applications have been filed.

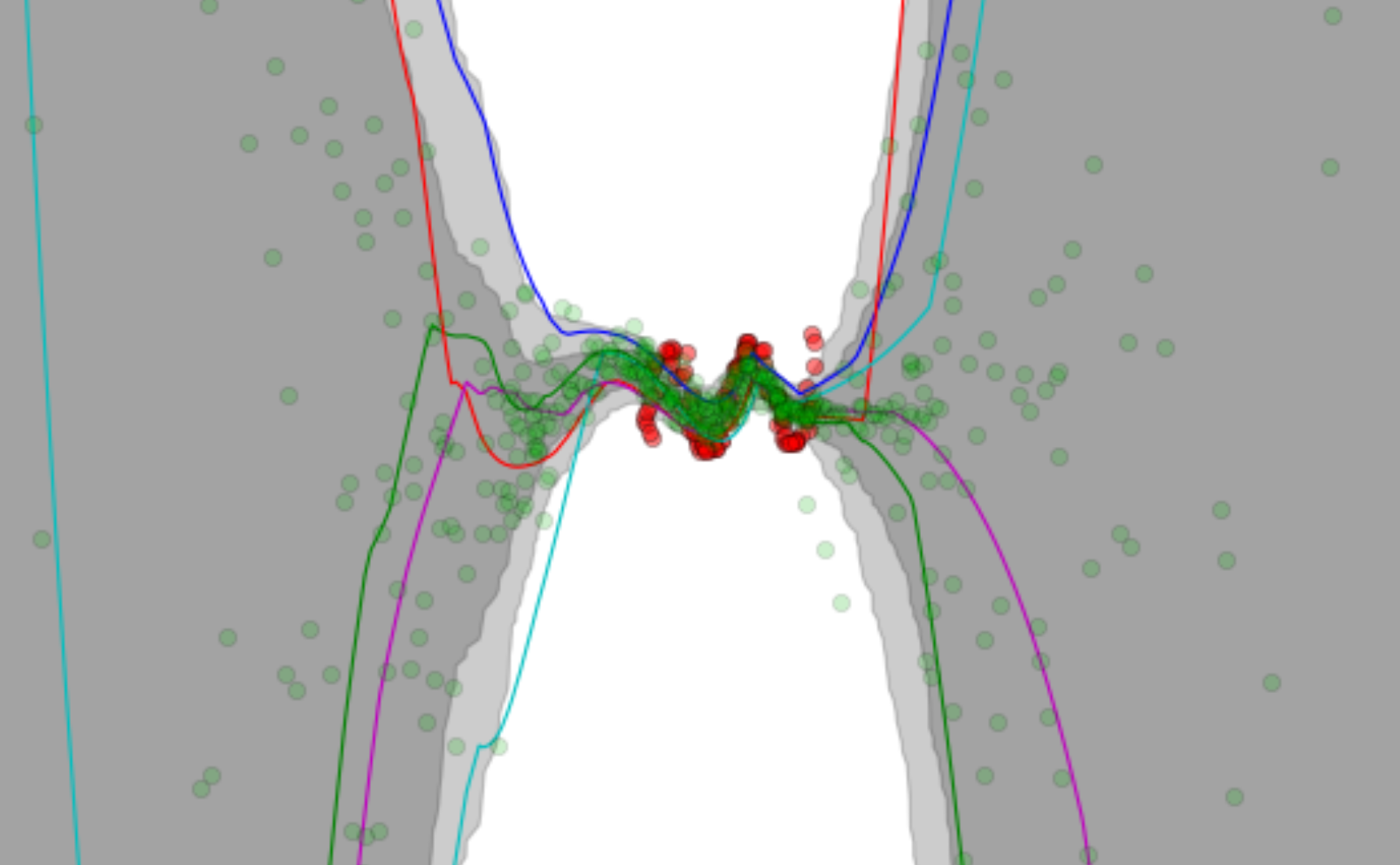

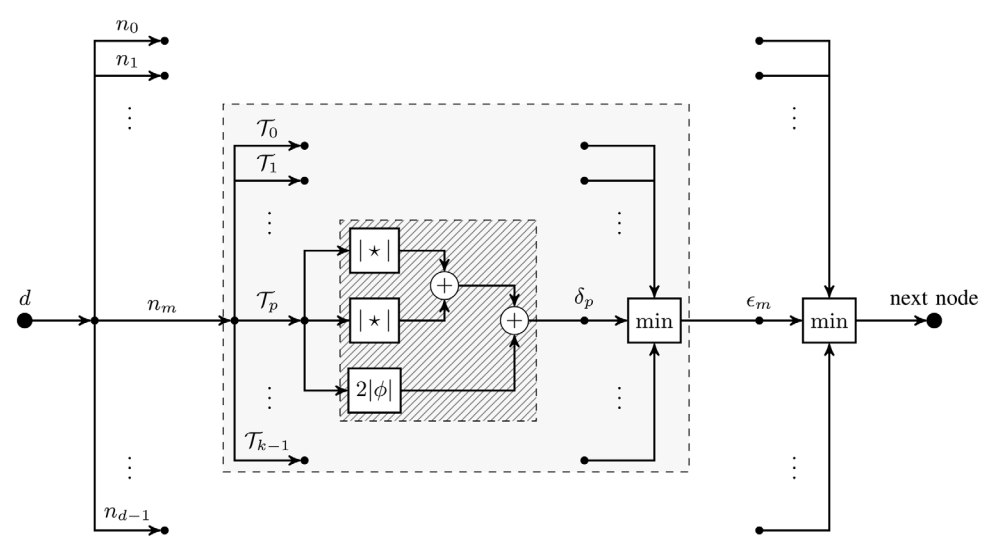

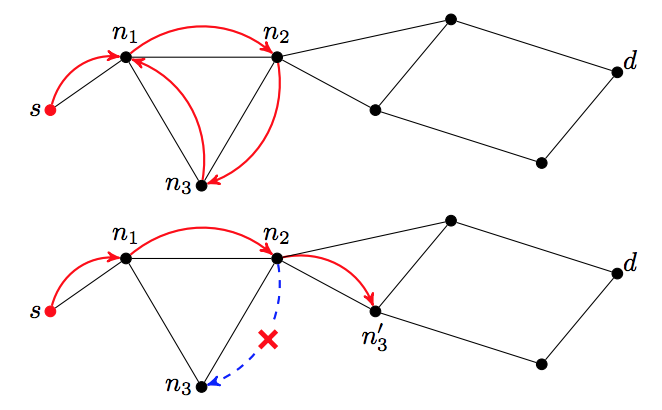

Network Science

My research originally focused on the development of a novel routing algorithm called Forest Routing. Through geometric routing, using a set of graph embeddings in a particular mathematical space, it offers both high scalability and native load balancing behavior. A coherent write-up on the subject can be found in my thesis, Adaptive Geometric Routing for the Internet Backbone. A demonstration of the developed model is shown below.

Optimizing Robustness in Geometric Routing via Embedding Redundancy and Regeneration. Networks, vol. 66, no. 4, 2015. R. Houthooft, S. Sahhaf, W. Tavernier, F. De Turck, D. Colle, M. Pickavet.

Robust Geometric Forest Routing with Tunable Load Balancing. The 34th Annual IEEE International Conference on Computer Communications (INFOCOM), 2015. R. Houthooft, S. Sahhaf, W. Tavernier, F. De Turck, D. Colle, M. Pickavet.

Fault-Tolerant Greedy Forest Routing for Complex Networks. The 6th International Workshop on Reliable Networks Design and Modeling (RNDM), 2014 (best paper award). Featured in Global Communications Newsletter, May 2015. R. Houthooft, S. Sahhaf, W. Tavernier, F. De Turck, D. Colle, M. Pickavet.
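To make the idea of geometric routing over a set of graph embeddings more concrete, here is a minimal Python sketch. It is not the Forest Routing algorithm from the papers above. It assumes, purely for illustration, that each embedding is a BFS spanning tree, that the distance function is the hop count along that tree, and that a node greedily forwards to the neighbor with the smallest distance to the destination over all trees. The names `bfs_tree`, `tree_distance`, and `greedy_route` are hypothetical.

```python
from collections import deque


def bfs_tree(graph, root):
    """Return the parent map of a BFS spanning tree rooted at `root`."""
    parent = {root: None}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in parent:
                parent[neighbor] = node
                queue.append(neighbor)
    return parent


def tree_distance(parent, a, b):
    """Hop count between `a` and `b` along the tree described by `parent`."""
    # Record the number of hops from `a` to each of its ancestors.
    hops_from_a = {}
    node, hops = a, 0
    while node is not None:
        hops_from_a[node] = hops
        node, hops = parent[node], hops + 1
    # Climb from `b` until reaching a common ancestor.
    node, hops = b, 0
    while node not in hops_from_a:
        node, hops = parent[node], hops + 1
    return hops + hops_from_a[node]


def greedy_route(graph, trees, src, dst):
    """Forward greedily using the minimum tree distance over all trees."""
    def dist(node):
        return min(tree_distance(tree, node, dst) for tree in trees)

    path = [src]
    while path[-1] != dst:
        current = path[-1]
        next_hop = min(graph[current], key=dist)
        if dist(next_hop) >= dist(current):
            return None  # stuck in a local minimum (cannot occur with spanning trees)
        path.append(next_hop)
    return path


# Toy example: a ring of six nodes, embedded in two trees (assumed roots 0 and 3).
graph = {0: [1, 5], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4, 0]}
trees = [bfs_tree(graph, 0), bfs_tree(graph, 3)]
path = greedy_route(graph, trees, 2, 5)
print(path)
```

Under these assumptions greedy forwarding always makes progress: the next node on the tree path towards the destination is a direct neighbor, so the minimum distance drops by at least one per hop. Using several trees gives each node alternative next hops, which is the intuition behind the redundancy and load balancing discussed above. How the actual algorithm selects and weights embeddings is described in the cited papers.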